OpenAI recently announced GPT-4o, its new flagship model, which can reason across audio, vision, and text in real time. The “o” stands for “omni,” and the model is a significant step toward more natural human-computer interaction.

What is GPT-4o?

GPT-4o is an AI model that accepts any combination of text, audio, image, and video as input and generates any combination of text, audio, and image as output. It can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation. Compared to GPT-4 Turbo, GPT-4o matches its performance on English text and code and is significantly better on text in other languages, while also being faster and 50% cheaper in the API. It is also notably better at vision and audio understanding than earlier models.

Before GPT-4o, ChatGPT’s Voice Mode chained three separate models: one transcribed audio to text, a second (GPT-3.5 or GPT-4) took in text and produced text, and a third converted that text back to audio. Because the main model only ever saw and produced text, a lot of information was lost along the way: it could not directly observe tone, multiple speakers, or background noise, and it could not output laughter, singing, or expressed emotion.
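The information loss in that three-stage pipeline can be illustrated with a toy sketch. Everything here is hypothetical: `AudioClip`, `transcribe`, `generate_reply`, and `synthesize` are stand-in names invented for illustration, not OpenAI's actual components. The sketch only shows that once audio is reduced to a plain string, the later stages have nothing but words to work with.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Toy stand-in for recorded speech: the words plus non-verbal detail."""
    words: str
    tone: str
    speakers: int
    background: str


def transcribe(clip: AudioClip) -> str:
    # Stage 1 (hypothetical speech-to-text): only the words survive.
    return clip.words


def generate_reply(text: str) -> str:
    # Stage 2 (hypothetical text model): receives and returns plain text.
    return f"You said: {text}"


def synthesize(text: str) -> AudioClip:
    # Stage 3 (hypothetical text-to-speech): has no tone or context to draw
    # on, so it can only produce a neutral, single-speaker voice.
    return AudioClip(words=text, tone="neutral", speakers=1, background="none")


def cascaded_voice_mode(clip: AudioClip) -> AudioClip:
    # The three stages chained together, as in the pre-GPT-4o Voice Mode.
    return synthesize(generate_reply(transcribe(clip)))


question = AudioClip(words="nice weather", tone="sarcastic",
                     speakers=2, background="heavy rain")
answer = cascaded_voice_mode(question)
print(answer.words)  # the words made it through
print(answer.tone)   # the sarcasm and the rain never reached stage 2
```

A single end-to-end model avoids this bottleneck because there is no text-only hand-off between stages.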

GPT-4o Training and Evaluations

GPT-4o was trained end-to-end across text, vision, and audio, meaning that all inputs and outputs are processed by the same neural network. Because it is OpenAI’s first model to combine all of these modalities, its capabilities and limitations are still at an early stage of exploration.

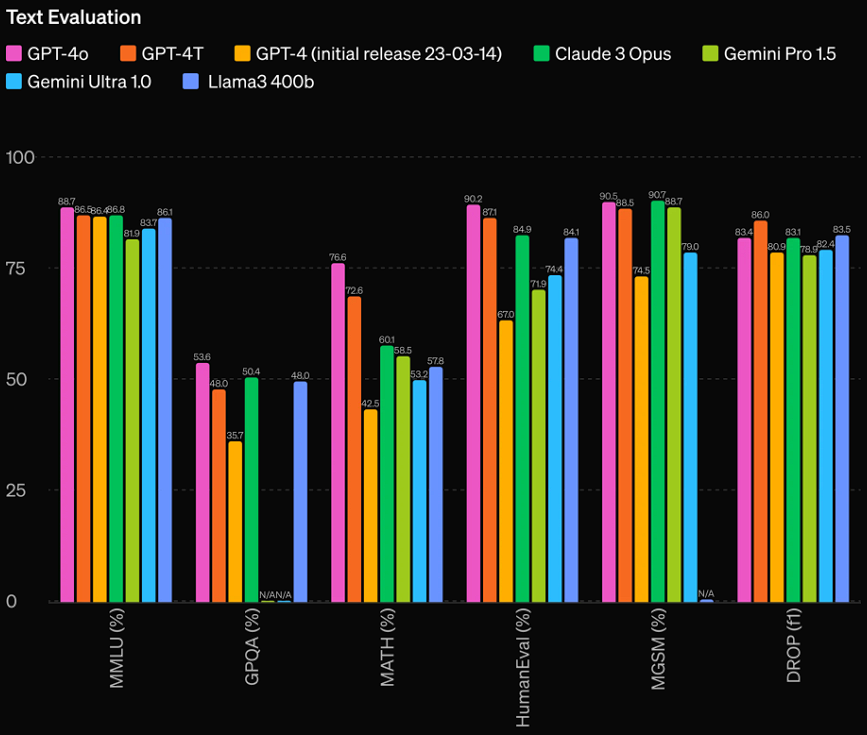

In performance evaluations on traditional benchmarks, GPT-4o matches GPT-4 Turbo on text, reasoning, and coding while setting new high-water marks on multilingual, audio, and vision capabilities. On MMLU, a benchmark of general knowledge questions, GPT-4o set a new high score of 88.7% (0-shot CoT).

Source: OpenAI

In speech recognition, GPT-4o significantly outperforms Whisper-v3 across all languages, particularly lower-resourced ones. On the M3Exam benchmark, which evaluates both multilingual and vision capabilities, GPT-4o outperforms GPT-4 in every language tested.

GPT-4o Safety and Limitations

Safety was built into GPT-4o’s design through techniques like filtering training data and refining model behavior post-training. Safety evaluations show that GPT-4o does not exceed medium risk in categories such as cybersecurity, persuasion, and model autonomy. These evaluations were conducted using a combination of automated and human tests.

OpenAI also conducted extensive external red teaming with more than 70 experts in various fields to identify risks introduced or amplified by the newly added modalities. The findings were used to build safety interventions that make interacting with GPT-4o safer.

Availability and Access

GPT-4o’s text and image capabilities are initially rolling out in ChatGPT, both in the free tier and for Plus users, who get up to 5x higher message limits. A new version of Voice Mode built on GPT-4o will be released in alpha within ChatGPT Plus in the coming weeks.

Developers can also access GPT-4o in the API as a text and vision model. OpenAI plans to make its audio and video capabilities available to a small group of partners soon.
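As a rough sketch of what text-and-vision access looks like, the snippet below builds a Chat Completions request that pairs a text prompt with an image and sends it using the official `openai` Python package (version 1.x). The helper names `build_vision_messages` and `ask_gpt4o` and the example URL are assumptions made for illustration, and the call assumes an API key is set in the `OPENAI_API_KEY` environment variable.

```python
def build_vision_messages(prompt: str, image_url: str) -> list:
    # One user message whose content mixes a text part and an image part.
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


def ask_gpt4o(prompt: str, image_url: str) -> str:
    # Imported lazily so the message builder works without the package.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=build_vision_messages(prompt, image_url),
    )
    return response.choices[0].message.content


# Example call (placeholder URL; requires network access and an API key):
# print(ask_gpt4o("What is in this image?", "https://example.com/photo.png"))
```

Keeping the message construction separate from the network call makes the request shape easy to inspect and test without spending API credits.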

Conclusion

GPT-4o represents a significant step towards more natural and efficient human-computer interaction. With improvements in speed, cost, and multilingual capabilities, as well as a focus on safety, GPT-4o is poised to transform how we interact with artificial intelligence.